While chiplets have been around for years, their use was historically limited to specific, specialized applications. Today, however, they are at the forefront of technology, powering millions of desktop PCs, workstations, servers, gaming consoles, phones, and even wearable devices worldwide.

In a matter of a few years, most leading chipmakers have embraced chiplet technology to drive innovation. It’s now clear that chiplets are poised to become the industry standard. Let’s explore what makes them so significant and how they’re shaping the future of technology.

TL;DR: What Are Chiplets?

Chiplets are segmented processors. Instead of consolidating every part into a single chip (known as a monolithic approach), specific sections are manufactured as separate chips. These individual chips are then mounted together into a single package using a complex connection system.

This arrangement allows the parts that can benefit from the latest fabrication methods to be shrunk in size, improving the efficiency of the process and allowing them to fit in more components.

The parts of the chip that can’t be significantly reduced, or don’t require reduction, can be produced using older and more economical methods.

While the process of manufacturing such processors is complex, the overall cost is typically lower. Furthermore, it offers processor companies a more manageable pathway to expand their product range.

Silicon Science

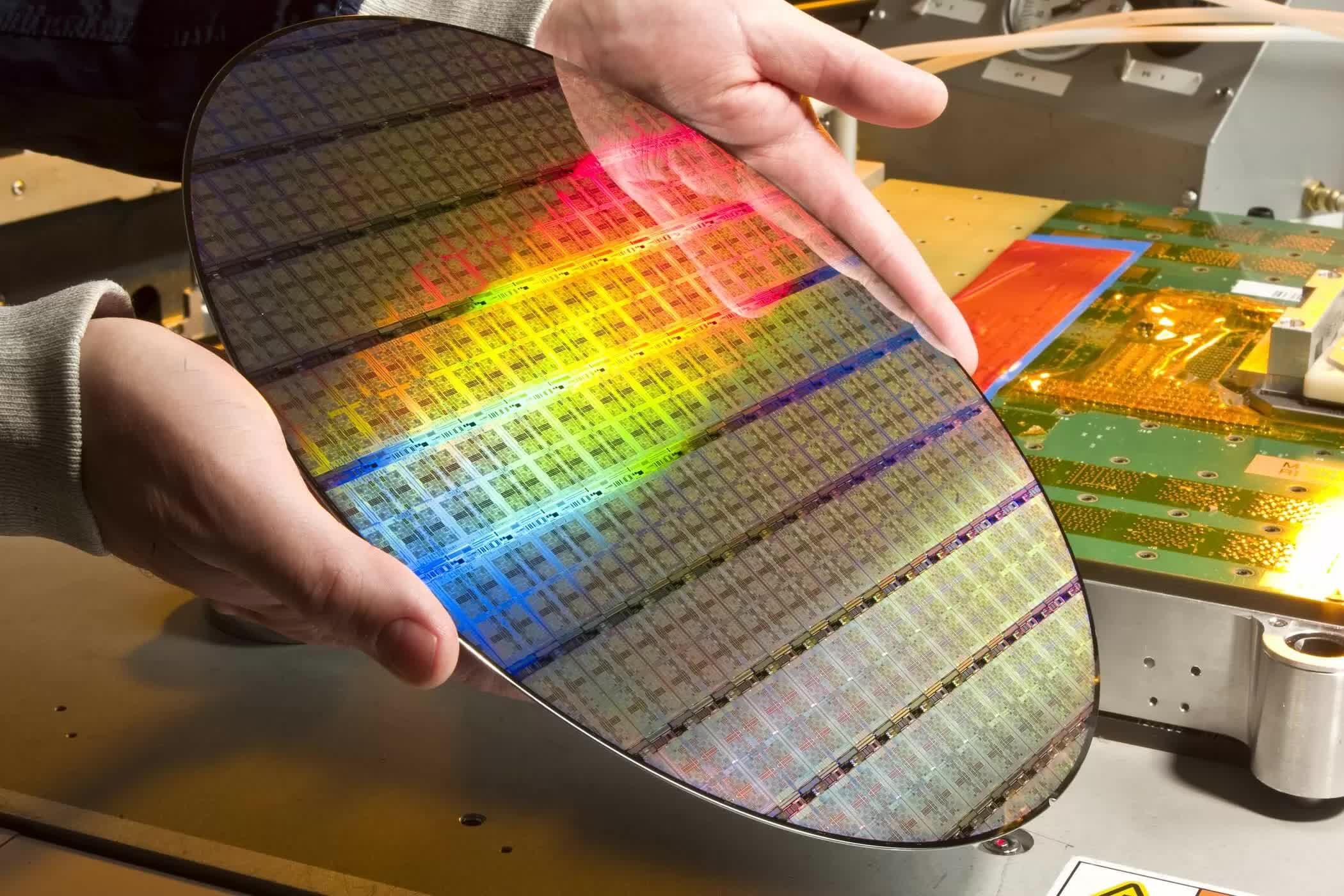

To fully understand why processor manufacturers have turned to chiplets, we must first delve into how these devices are made. CPUs and GPUs start their life as large discs made of ultra-pure silicon, typically a little under 12 inches (300 mm) in diameter and 0.04 inches (1 mm) thick.

This silicon wafer undergoes a sequence of intricate steps, resulting in multiple layers of different materials: insulators, dielectrics, and metals. These layers’ patterns are created through a process called photolithography, where ultraviolet light is shone through an enlarged version of the pattern (a mask), and subsequently shrunk via lenses to the required size.

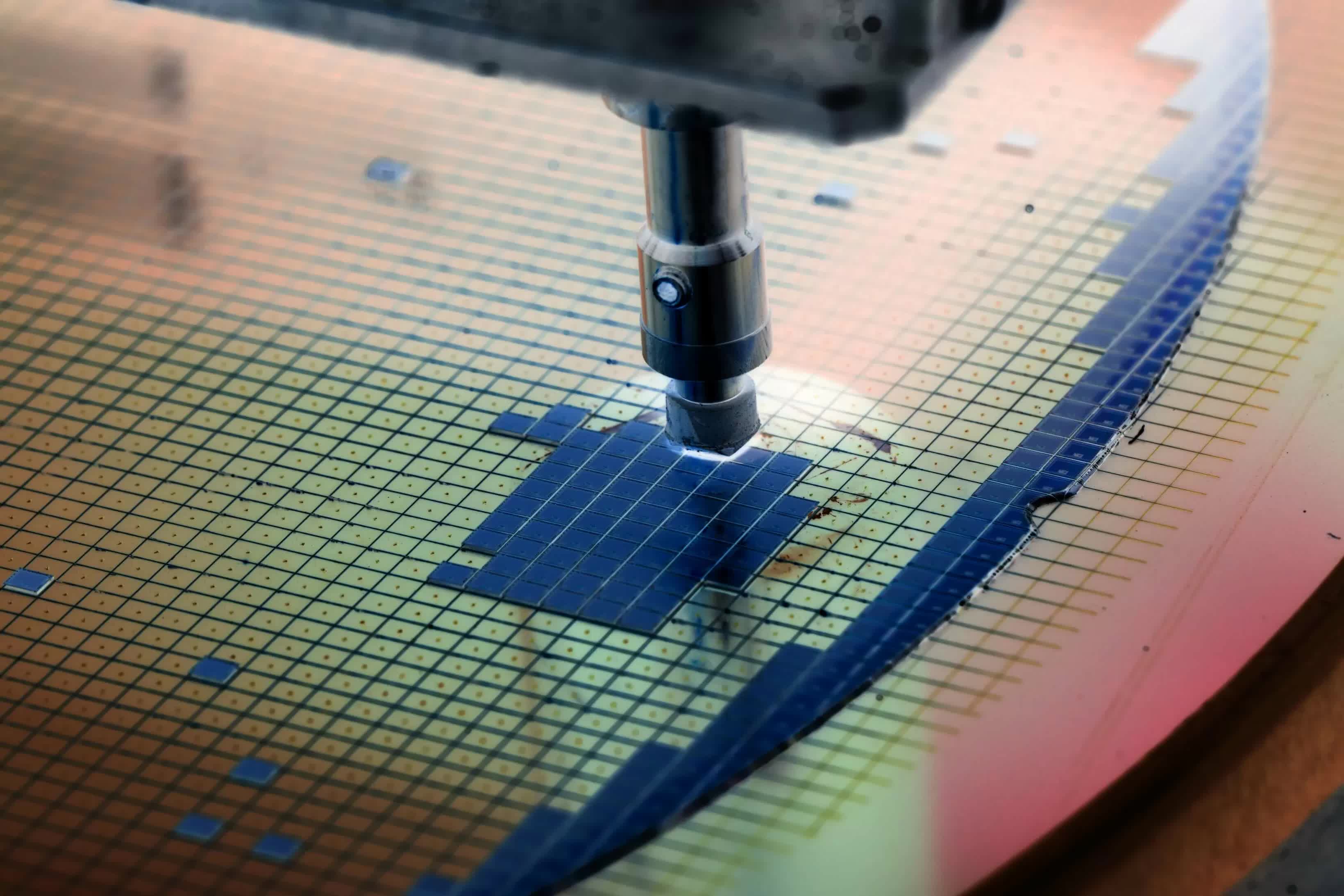

The pattern gets repeated, at set intervals, across the surface of the wafer, and each of these will ultimately become a processor. Since chips are rectangular and wafers are circular, the patterns must overlap the disc’s perimeter. These overlapping parts are ultimately discarded as they are non-functional.
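To get a feel for how many rectangular chips fit on a circular wafer, here is a minimal sketch using a common first-order approximation. The function name and the 100 mm² example die are illustrative assumptions; real fabs also account for scribe lines, edge exclusion, and die aspect ratio, so treat the result as a rough estimate only.

```python
import math


def estimate_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Rough count of whole dies that fit on a circular wafer.

    First term: wafer area divided by die area.
    Second term: an approximation of the partial dies lost around the edge.
    """
    if wafer_diameter_mm <= 0 or die_area_mm2 <= 0:
        raise ValueError("wafer diameter and die area must be positive")
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    gross_dies = wafer_area / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return max(0, math.floor(gross_dies - edge_loss))


# Hypothetical 100 mm² die on a standard 300 mm wafer.
print(estimate_dies_per_wafer(300, 100))
```

Halving the die area in this sketch more than doubles the estimate, because smaller rectangles also waste less of the wafer's curved edge.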

Once completed, the wafer is tested using a probe applied to each chip. The electrical examination results inform engineers about the processor’s quality against a long list of criteria. This initial stage, known as chip binning, helps determine the processor’s “grade.”

For example, if the chip is intended to be a CPU, every part should function correctly, operating within a set range of clock speeds at a specific voltage. Each wafer section is then categorized based on these test results.

Upon completion, the wafer is cut into individual pieces, or “dies,” that are viable for use. These dies are then mounted onto a substrate, akin to a specialized motherboard. The processor undergoes further packaging (for instance, with a heat spreader) before it’s ready for distribution.

The entire sequence can take weeks of manufacturing, and companies such as TSMC and Samsung charge high fees for each wafer, anywhere between $3,000 and $20,000 depending on the process node being used.
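As a rough illustration of why wafer pricing matters, the sketch below spreads the price of one wafer over the chips cut from it. The two wafer prices are the ends of the range quoted above; the figure of 600 dies per wafer is a purely hypothetical assumption, and the calculation ignores defective dies, testing, and packaging.

```python
def cost_per_die(wafer_price_usd: float, dies_per_wafer: int) -> float:
    """Wafer price divided evenly across every die cut from it."""
    if dies_per_wafer <= 0:
        raise ValueError("dies_per_wafer must be positive")
    return wafer_price_usd / dies_per_wafer


# Hypothetical die count, chosen only to make the arithmetic concrete.
ASSUMED_DIES_PER_WAFER = 600

for wafer_price in (3_000, 20_000):
    per_die = cost_per_die(wafer_price, ASSUMED_DIES_PER_WAFER)
    print(f"${wafer_price:,} wafer -> ${per_die:.2f} per die")
```

Under these assumptions, moving from the cheapest to the most expensive wafer multiplies the silicon cost of each die by the same factor as the wafer price, which is why it pays to reserve the priciest nodes for the circuits that actually benefit from them.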

“Process node” is the term used to describe the entire fabrication system. Historically, nodes were named after the transistor’s gate length. However, as manufacturing technology improved and allowed for ever-smaller components, the nomenclature stopped following any physical aspect of the die, and now it’s simply a marketing tool.

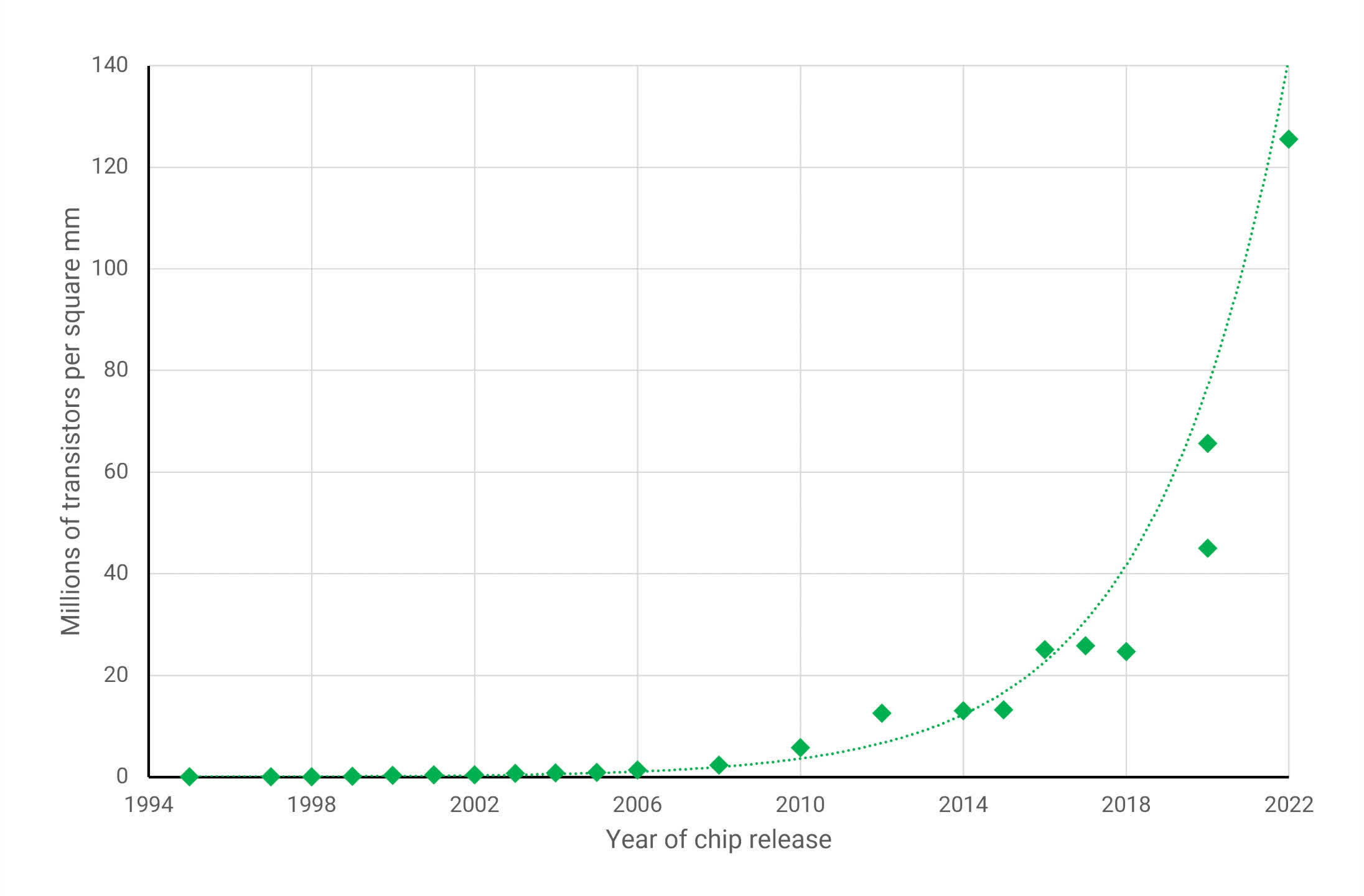

Nevertheless, each new process node brings benefits over its predecessor. It might be cheaper to produce, consume less power at the same clock speed (or vice versa), or have a higher density. The latter metric measures how many components can fit within a given die area. In the graph below, you can see how this has evolved over the years for GPUs (the largest and most complex chips you’ll find in a PC)…

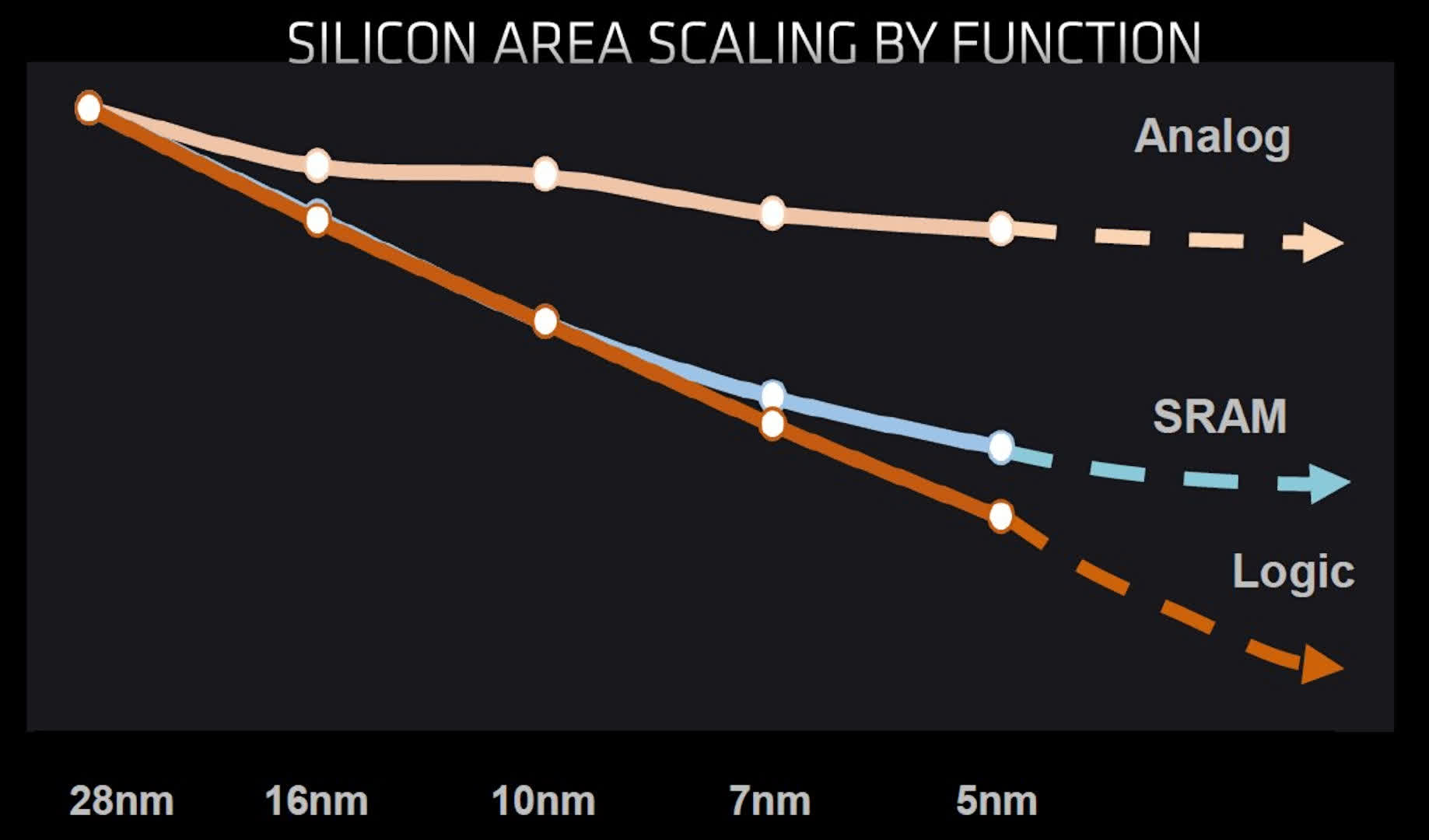

The improvements in process nodes give engineers a way to increase the capabilities and performance of their products without having to use big and costly chips. However, the above graph only tells part of the story, as not every aspect of a processor can benefit from these advancements.

Circuits inside chips can be allocated into one of the following broad categories:

- Logic: handles data, math, and decision-making

- Memory: usually SRAM, which stores data for the logic

- Analog: circuits that manage signals between the chip and other devices

However, while logic circuits continue to shrink with every major step forward in process node technology, analog circuits have barely changed and SRAM is starting to reach a limit too.

While logic still forms the largest portion of the die, the amount of SRAM in today’s CPUs and GPUs has grown significantly in recent years. For example, AMD’s Vega 20 chip used in its Radeon VII graphics card (2019) featured a combined total of 5 MB of L1 and L2 cache. Just two GPU generations later, the Navi 21 chip powering the Radeon RX 6000 series (2020) included over 130 MB of combined cache, a remarkable 25-fold increase.

We can expect these figures to keep rising as new generations of processors are developed, but with memory not scaling down as well as the logic, it will become increasingly less cost-effective to manufacture all of the circuitry on the same process node.

In an ideal world, one would design a die where analog sections are fabricated on the largest and cheapest node, SRAM parts on a much smaller one, and logic reserved for the absolute cutting-edge technology. Unfortunately, this is not practically achievable. However, there exists an alternative approach.

Divide and Conquer

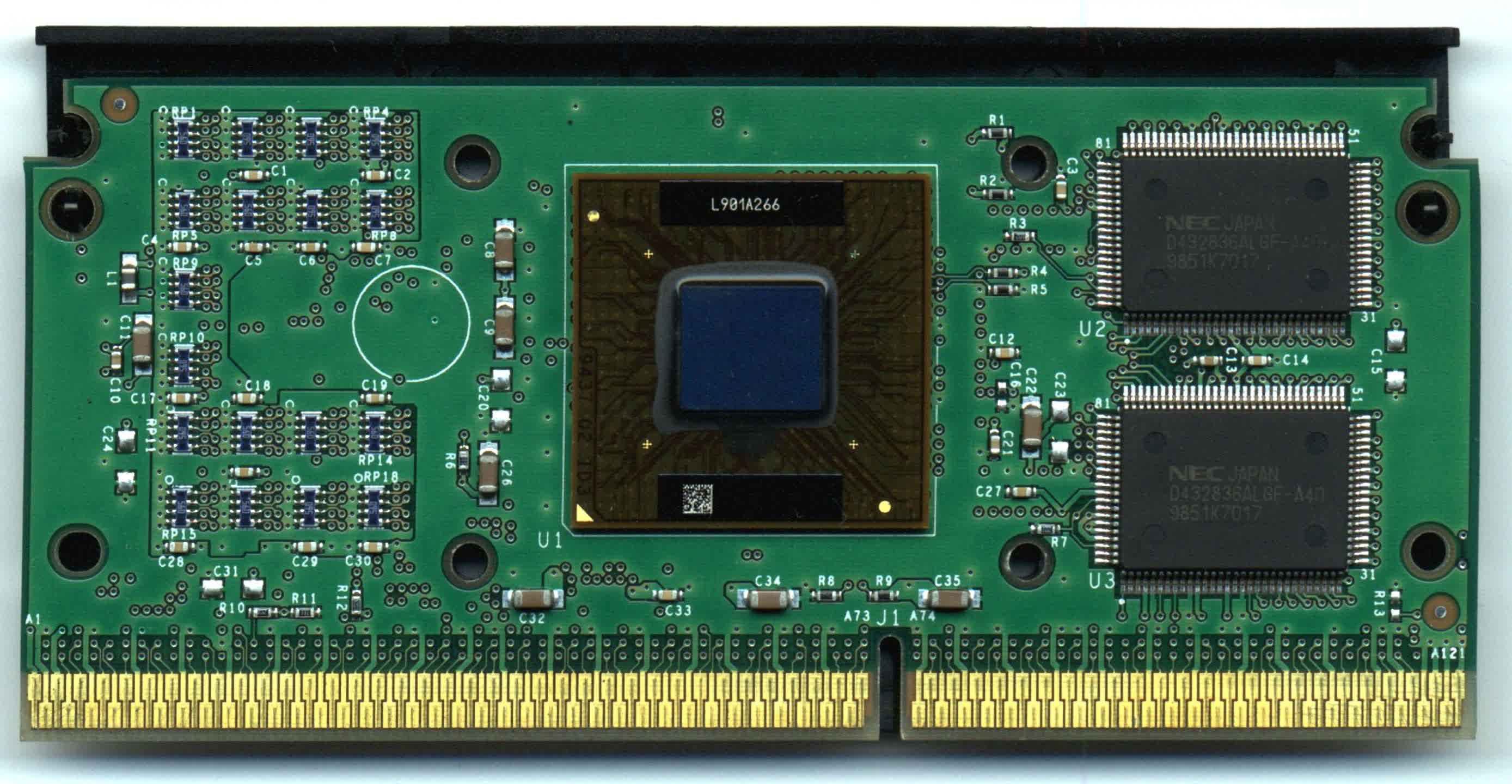

In 1997, Intel introduced the Pentium II, a successor to its original P5 processor. What set it apart from other processors at the time was the design hidden beneath its plastic shield: a circuit board housing two chips. The main chip contained all the processing logic and analog systems, while one or two separate SRAM modules served as Level 2 cache.

While Intel manufactured the primary chip, the cache was sourced from external suppliers. This approach became fairly standard for desktop PCs in the mid-to-late 1990s, until advances in semiconductor fabrication allowed logic, memory, and analog systems to be fully integrated into a single die.

While Intel continued to dabble with multiple chips in the same package, it largely stuck with the so-called monolithic approach for processors, i.e., one chip for everything. For most processors, there was no need for more than one die, as manufacturing techniques were proficient (and affordable) enough to keep things simple.

However, other companies were more interested in following a multi-chip approach, most notably IBM. In 2004, it was possible to purchase an 8-chip version of the POWER4 server CPU that comprised four processors and four cache modules, all mounted within the same body (known as a multi-chip module, or MCM, approach).

Around this time, the term “heterogeneous integration” started to appear, partially due to research work done by DARPA. Heterogeneous integration aims to separate the various sections of a processing system, fabricate them individually on the nodes best suited for each, and then combine them into the same package.

Today, this is better known as system-in-package (SiP) and has been the standard method for equipping smartwatches with chips from their inception. For example, the Series 1 Apple Watch houses a CPU, some DRAM and NAND Flash, multiple controllers, and other components within a single structure.

A similar setup can be achieved by having different systems all on a single die (known as an SoC, or system-on-a-chip). However, this approach doesn’t allow for taking advantage of different node prices, nor can every component be manufactured this way.

For a technology vendor, using heterogeneous integration for a niche product is one thing, but employing it for the majority of their portfolio is another. This is precisely what AMD did with its range of processors. In 2017, the semiconductor giant introduced its Zen architecture with the launch of the single-die Ryzen desktop CPU. Just a few months later, AMD debuted two multi-chip product lines: Threadripper and EPYC, with the latter featuring configurations of up to four dies.

With the launch of Zen 2 two years later, AMD fully embraced HI, MCM, SiP (call it what you will). They shifted the majority of the analog systems out of the processor and placed them into a separate die. These were manufactured on a simpler, cheaper process node, while a more advanced one was used for the remaining logic and cache.

And so, chiplets became the buzzword of choice.

Smaller is Better

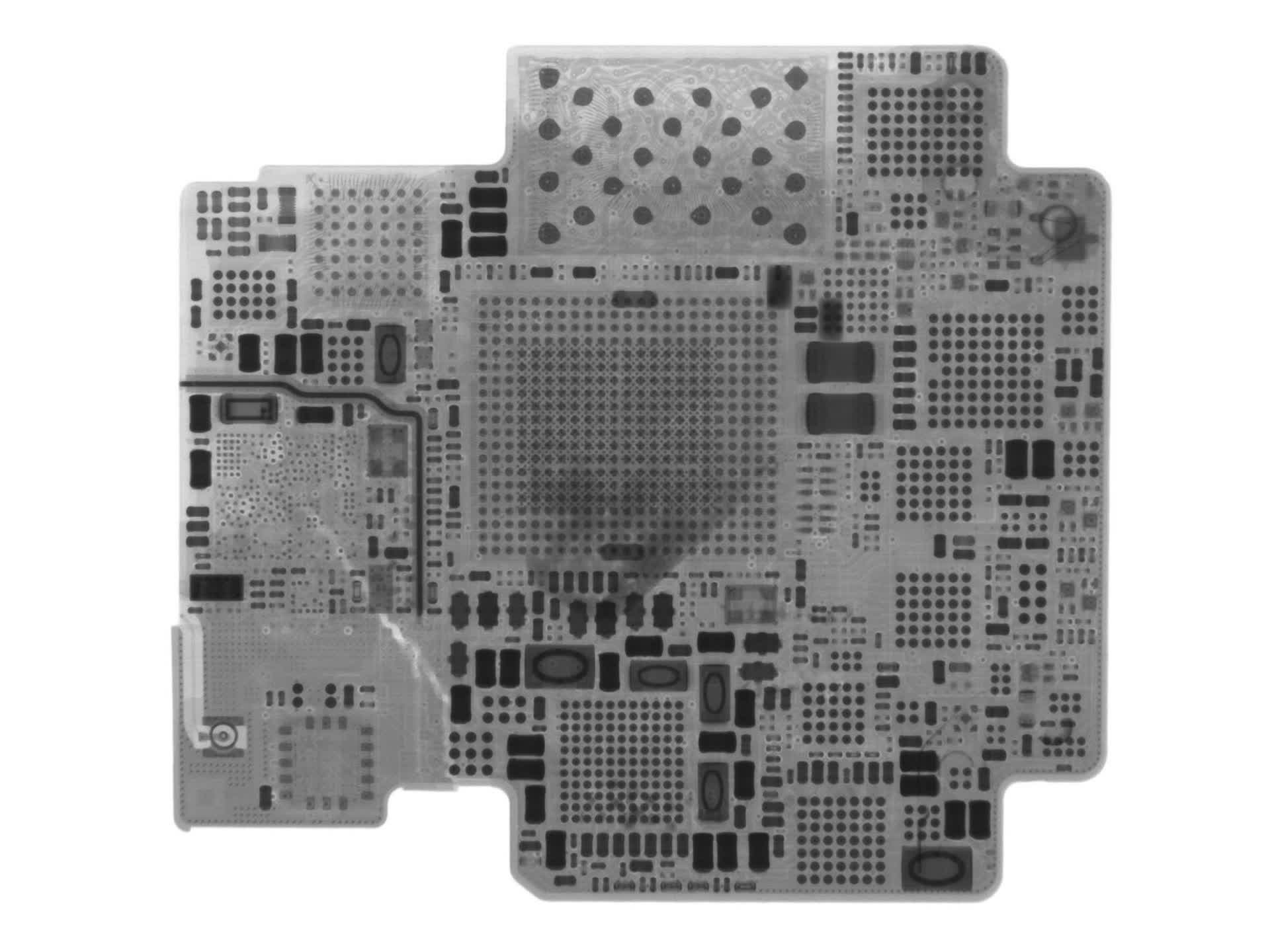

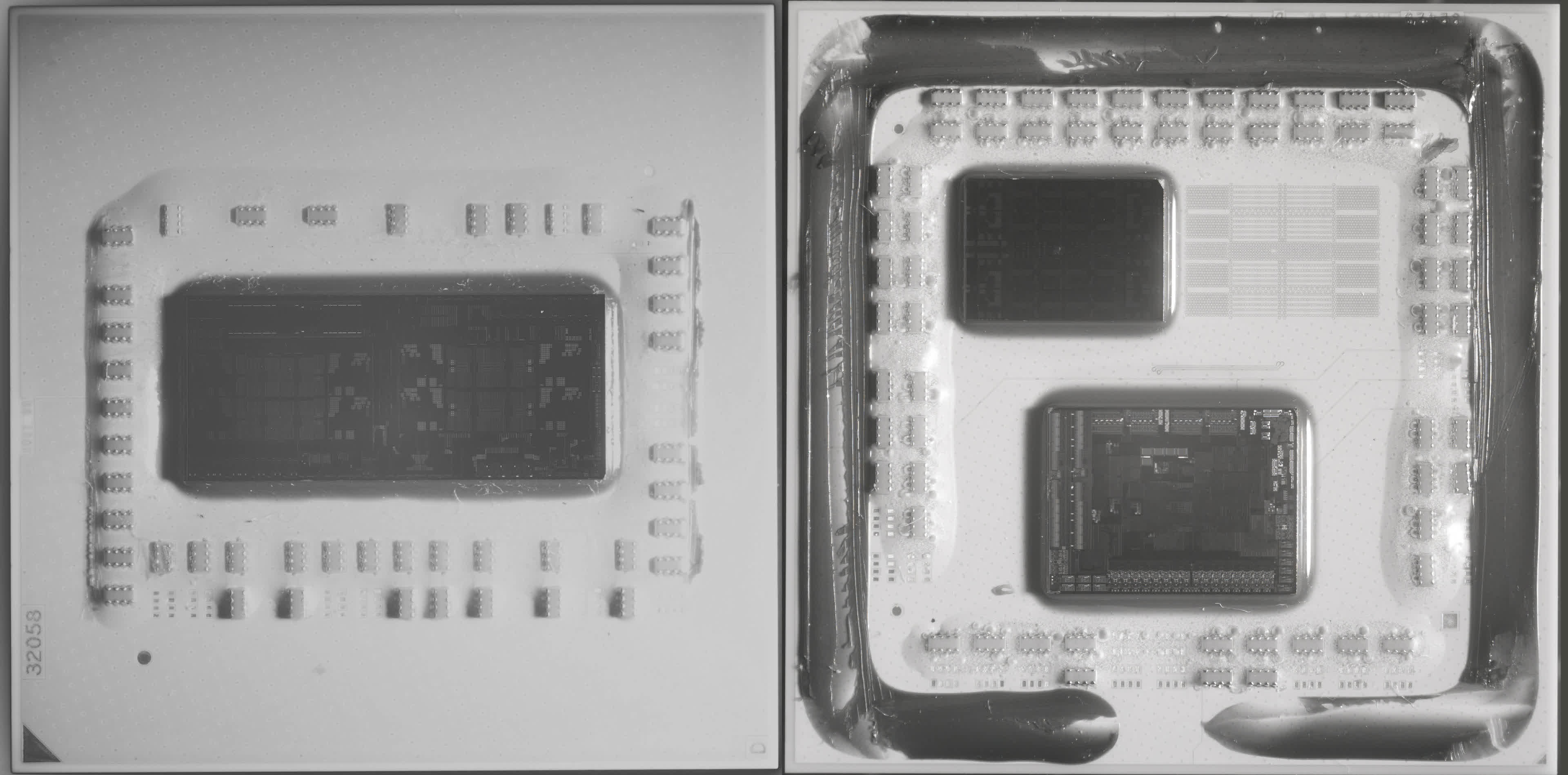

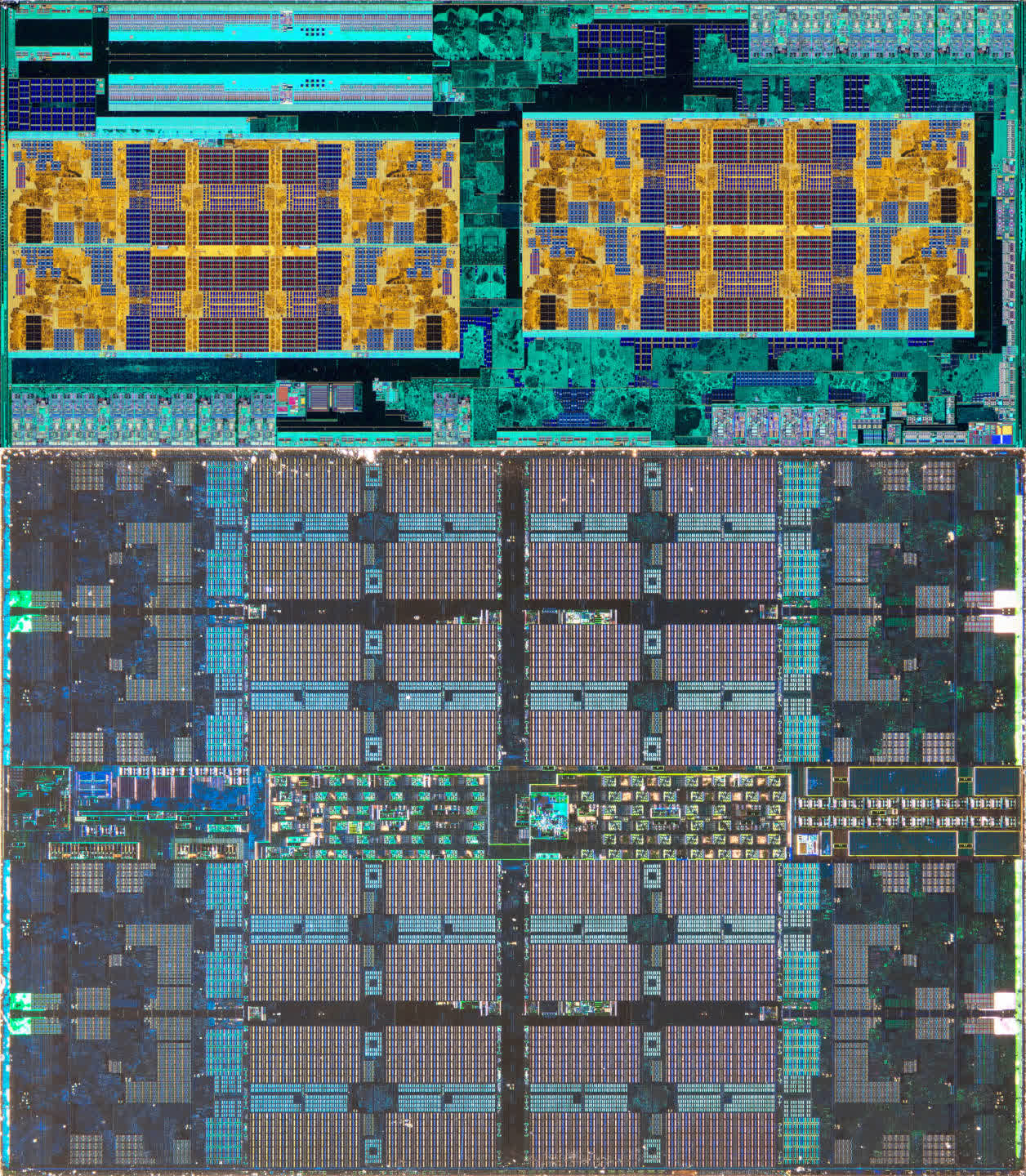

To understand exactly why AMD chose this direction, let’s examine the accompanying image. It showcases two older CPUs from the Ryzen 5 series: the 2600 on the left, employing the so-called Zen+ architecture, and the Zen 2-powered 3600 on the right.

The heat spreaders on both models have been removed, and the photographs were taken using an infrared camera. The 2600’s single die houses eight cores, though two of them are disabled for this particular model.

This is also the case for the 3600, but here we can see that there are two dies in the package: the Core Complex Die (CCD) at the top, housing the cores and cache, and the Input/Output Die (IOD) at the bottom, containing all the controllers (for memory, PCI Express, USB, etc.) and physical interfaces.

Since both Ryzen CPUs fit into the same motherboard socket, the two images are essentially to scale. On the surface, it might seem that the two dies in the 3600 have a larger combined area than the single chip in the 2600, but appearances can be deceiving.

If we directly compare the chips containing the cores, it’s clear how much space in the older model is taken up by analog circuitry: it’s all the green shades surrounding the gold-colored cores and cache. In the Zen 2 CCD, however, very little die area is dedicated to analog systems; it’s almost entirely composed of logic and SRAM.

The Zen+ chip has an area of 213 mm² and was manufactured by GlobalFoundries using its 12 nm process node. For Zen 2, AMD retained GlobalFoundries’ services for the 125 mm² IOD but utilized TSMC’s superior N7 node for the 73 mm² CCD.

The combined area of the chips in the newer model is smaller, and it also boasts twice as much L3 cache while supporting faster memory and PCI Express. The best part of the chiplet approach, however, was that the compact size of the CCD made it possible for AMD to fit another one into the package. This development gave birth to the Ryzen 9 series, offering 12- and 16-core models for desktop PCs.

Even better, by using two smaller chips instead of one large one, each wafer can potentially yield more dies. In the case of the Zen 2 CCD, a single 12-inch (300 mm) wafer can produce up to 85% more dies than for the Zen+ model.

The smaller the slice one takes out of a wafer, the less likely one is to find manufacturing defects in it (as they tend to be randomly distributed across the disc). Taking all of this into account, the chiplet approach not only gave AMD the ability to expand its portfolio, it did so far more cost-effectively: the same CCDs can be used in multiple models, and each wafer produces hundreds of them!
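One simple way to see why smaller dies are less likely to contain a defect is the Poisson yield model, in which the chance that a die is defect-free falls off exponentially with its area. The sketch below applies it to the three die areas mentioned above. The defect density is a hypothetical value chosen for illustration (and applied to all three dies even though they come from different process nodes); it is not a figure from AMD, GlobalFoundries, or TSMC.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Probability that a die has zero randomly distributed defects."""
    if die_area_mm2 < 0 or defects_per_mm2 < 0:
        raise ValueError("area and defect density must be non-negative")
    return math.exp(-die_area_mm2 * defects_per_mm2)


# Hypothetical defect density: 0.001 defects per mm² (0.1 per cm²).
ASSUMED_DEFECT_DENSITY = 0.001

for name, area_mm2 in (("Zen+ die", 213), ("Zen 2 IOD", 125), ("Zen 2 CCD", 73)):
    good_fraction = poisson_yield(area_mm2, ASSUMED_DEFECT_DENSITY)
    print(f"{name} ({area_mm2} mm²): {good_fraction:.1%} defect-free")
```

Whatever defect density is assumed, the ordering stays the same: the 73 mm² CCD always comes out ahead of the 213 mm² monolithic die, which is the point the paragraph above makes.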

But if this design choice is so advantageous, why isn’t Intel doing it? Why aren’t we seeing it being used in other processors, like GPUs?

Following the Lead

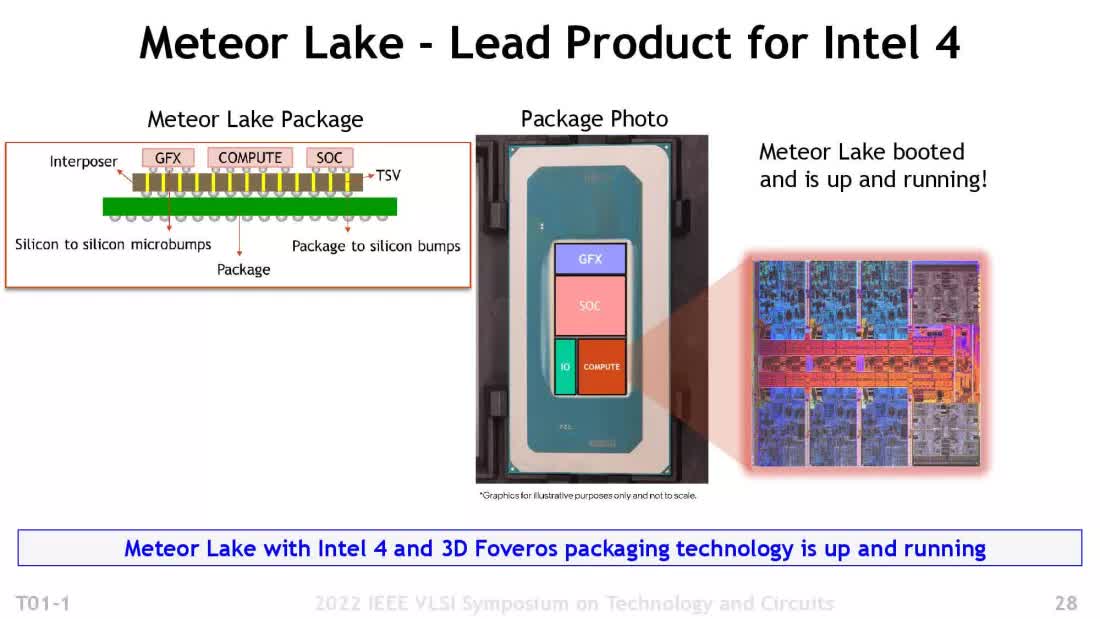

To address the first question, Intel has been gradually adopting chiplet technology as well. The first consumer CPU architecture it shipped using chiplets is called Meteor Lake. Intel’s approach is somewhat unique though, so let’s explore how it differs from AMD’s.

Using the term tiles instead of chiplets, this generation of processors split the previously monolithic design into four separate chips:

- Compute tile: Contains all of the cores and L2 cache

- GFX tile: Houses the integrated GPU

- SoC tile: Incorporates L3 cache, PCI Express, and other controllers

- IO tile: Accommodates the physical interfaces for memory and other devices

High-speed, low-latency connections are present between the SoC and the other three tiles, and all of them are connected to another die, known as an interposer. This interposer delivers power to each chip and contains the traces between them. The interposer and four tiles are then mounted onto an additional board to allow the whole assembly to be packaged.

Unlike Intel, AMD does not use any special mounting die but has its own unique connection system, known as Infinity Fabric, to handle chiplet data transactions. Power delivery runs through a fairly standard package, and AMD also uses fewer chiplets. So why is Intel’s design the way it is?

One challenge with AMD’s approach is that it’s not very suitable for the ultra-mobile, low-power sector. This is why AMD still uses monolithic CPUs for that segment. Intel’s design allows it to mix and match different tiles to fit a specific need. For example, budget models for affordable laptops can use much smaller tiles everywhere, whereas AMD only has one size of chiplet for each purpose.

The downside to Intel’s system is that it’s complex and expensive to produce (which has led to various kinds of issues). Both CPU firms, however, are fully committed to the chiplet concept. Once every part of the manufacturing chain is engineered around it, costs should decrease.

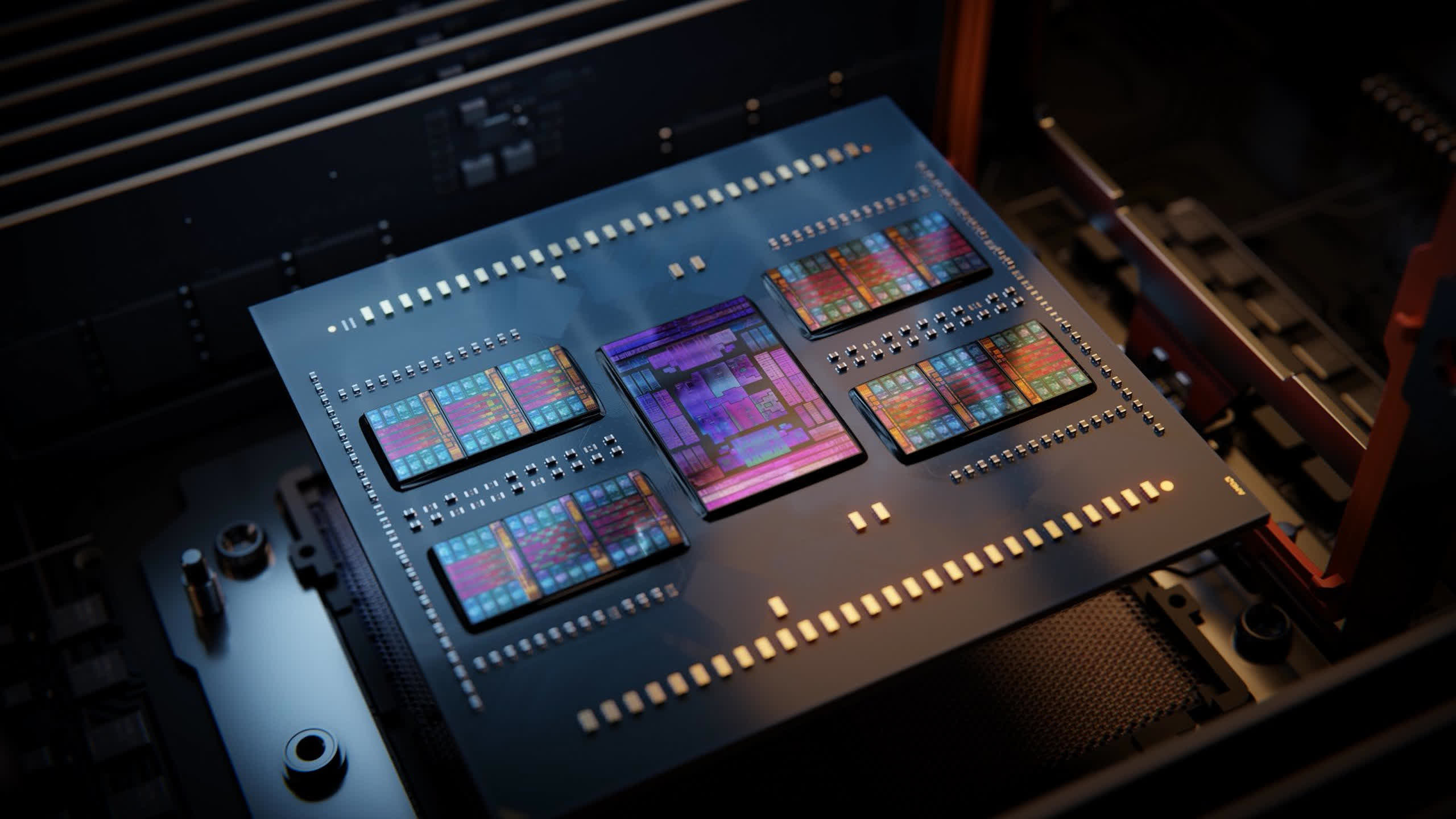

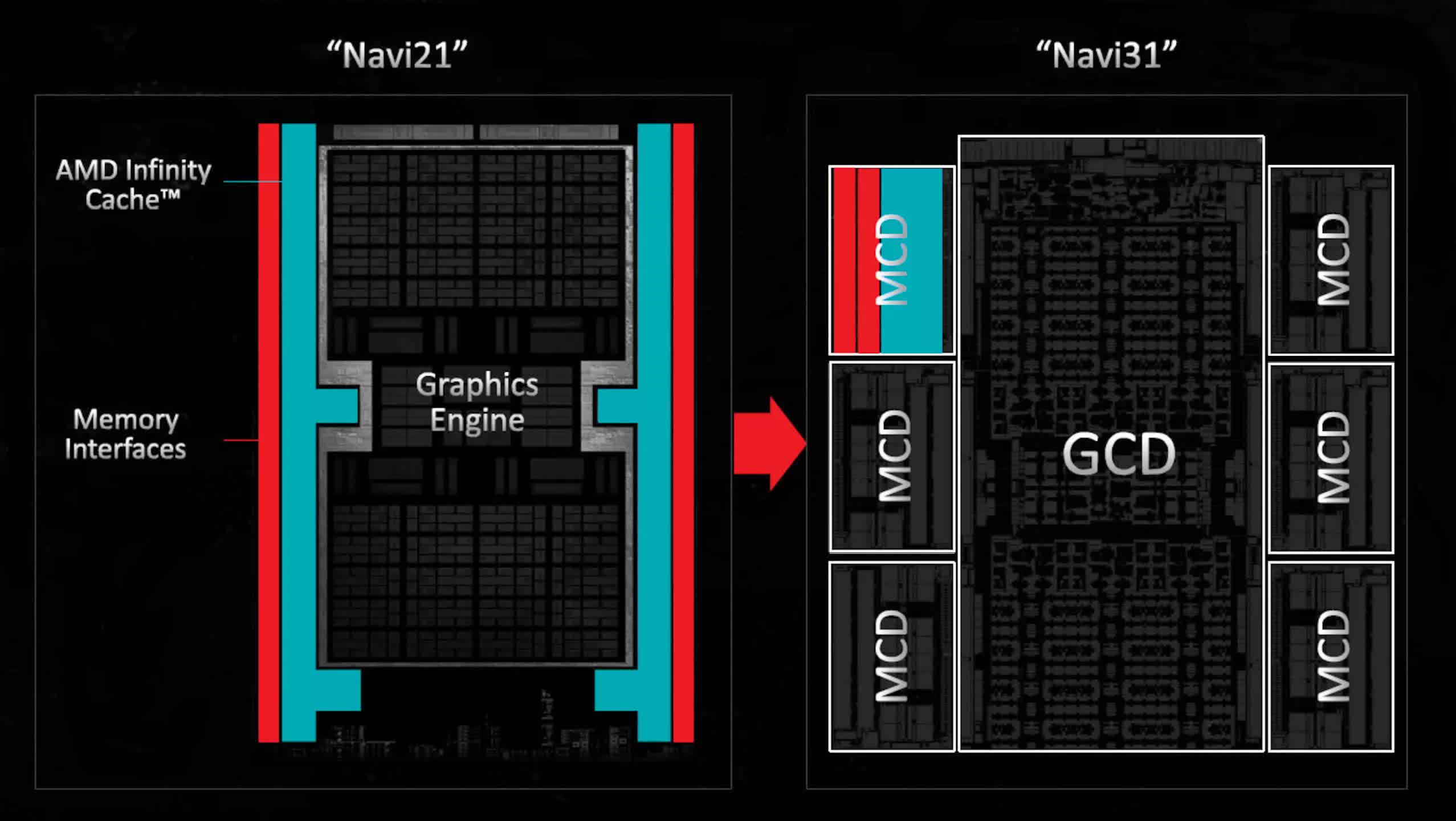

As for GPUs, they contain relatively little analog circuitry compared to the rest of the die. However, the amount of SRAM inside has been steadily increasing. This trend prompted AMD to leverage its chiplet expertise in the Radeon 7000 series, with the Radeon RX 7900 GPUs featuring a multi-die design. These GPUs consist of a single large die for the cores and L2 cache, along with five or six smaller dies, each containing a slice of L3 cache and a memory controller.

By moving these components out of the main die, engineers were able to significantly increase the amount of logic without relying on the latest, most expensive process nodes to keep chip sizes manageable. While this innovation likely helped reduce overall costs, it did not significantly expand the breadth of AMD’s graphics portfolio.

Currently, Nvidia and Intel consumer GPUs are showing no signs of adopting AMD’s chiplet approach. Both companies rely on TSMC for all manufacturing duties and seem content to produce extremely large chips, passing the cost onto consumers.

That said, it is known that both are actively exploring and implementing chiplet-based architectures in some of their GPU designs. For example, Nvidia’s Blackwell data center GPUs utilize a chiplet design featuring two large dies connected via a high-speed interlink capable of 10 terabytes per second, effectively functioning as a single GPU.

Getting ‘Moore’ with Chiplets

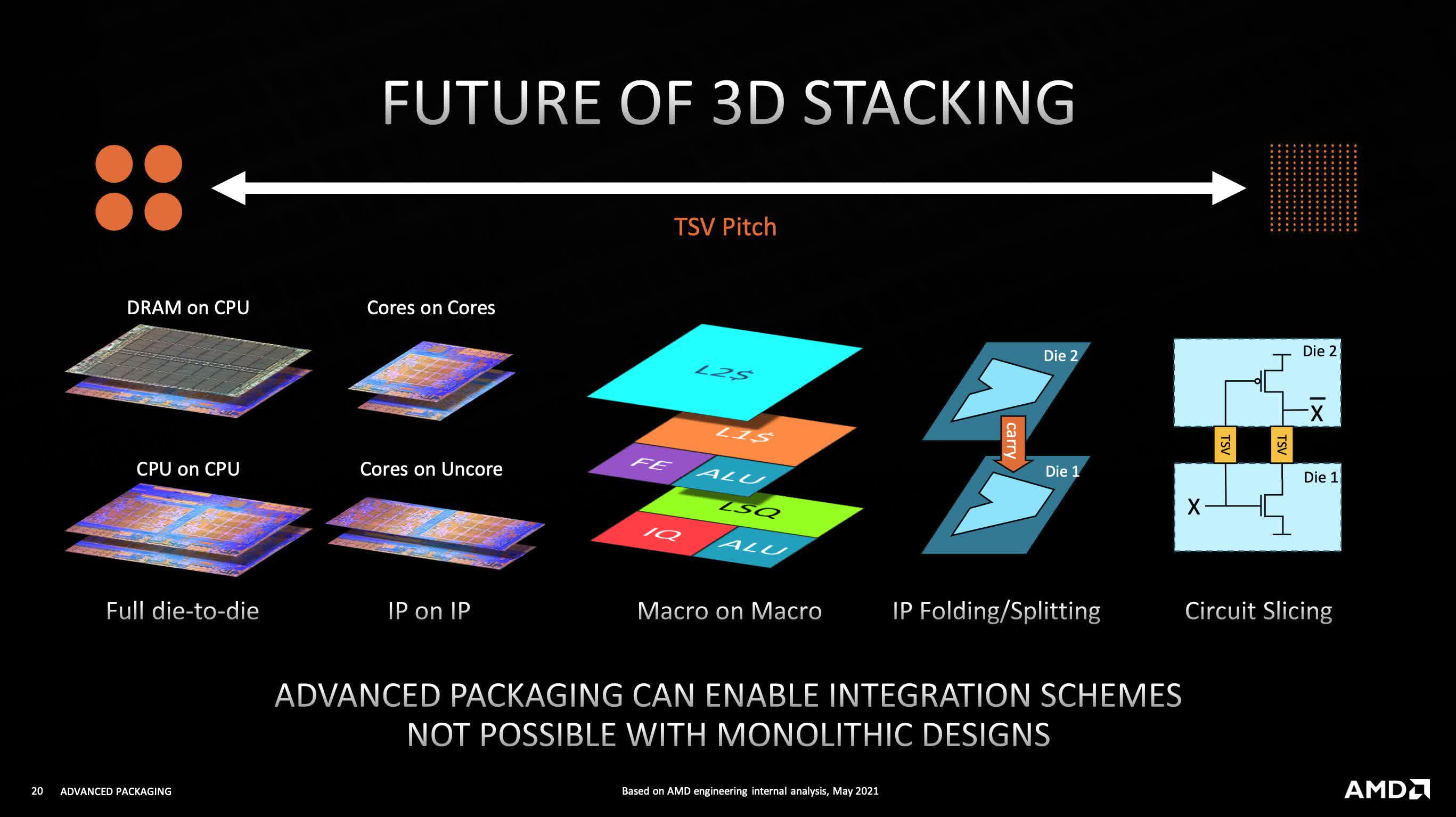

No matter when these changes occur, the fundamental truth is that they must happen. Despite the tremendous technological advances in semiconductor manufacturing, there is a definite limit to how much each component can be shrunk.

To continue enhancing chip performance, engineers essentially have two avenues: add more logic, with the necessary memory to support it, and increase internal clock speeds. Regarding the latter, the average CPU hasn’t changed significantly in this respect for years. AMD’s FX-9590 processor, from 2013, could reach 5 GHz in certain workloads, while the highest clock speed in its current models is 5.7 GHz (with the Ryzen 9 9950X).

Intel’s highest-clocked consumer CPU is the Core i9-14900KS, featuring a maximum turbo frequency of 6.2 GHz on two cores. This “special edition” processor holds the record for the fastest out-of-the-box clock speed among desktop CPUs.

However, what has changed is the amount of circuitry and SRAM. The aforementioned AMD FX-9590 had 8 cores (and 8 threads) and 8 MB of L3 cache, whereas the 9950X boasts 16 cores, 32 threads, and 64 MB of L3 cache. Intel’s CPUs have similarly expanded in terms of cores and SRAM.

Nvidia’s first unified shader GPU, the G80 from 2006, consisted of 681 million transistors, 128 cores, and 96 kB of L2 cache in a chip measuring 484 mm² in area. Fast forward to 2022, when the AD102 was launched, and it now comprises 76.3 billion transistors, 18,432 cores, and 98,304 kB of L2 cache within 608 mm² of die area.
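The jump in density between those two GPUs is easy to work out from the figures just quoted. The sketch below is only arithmetic on the transistor counts and die areas given above; the `Gpu` class and its field names are illustrative, not part of any vendor tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    year: int
    transistors: float
    die_area_mm2: float

    @property
    def density_millions_per_mm2(self) -> float:
        """Average transistor density in millions of transistors per mm²."""
        return self.transistors / self.die_area_mm2 / 1e6


G80 = Gpu("G80", 2006, 681e6, 484)
AD102 = Gpu("AD102", 2022, 76.3e9, 608)

density_ratio = AD102.density_millions_per_mm2 / G80.density_millions_per_mm2

for gpu in (G80, AD102):
    print(f"{gpu.name} ({gpu.year}): {gpu.density_millions_per_mm2:.2f} M transistors/mm²")
print(f"Density increase: about {density_ratio:.0f}x")
```

In other words, the die grew by only about a quarter in area, so nearly all of the extra transistors came from packing them more tightly.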

In 1965, Fairchild Semiconductor co-founder Gordon Moore observed that in the early years of chip manufacturing, the density of components inside a die was doubling each year for a fixed minimum production cost. This observation became known as Moore’s Law and was later interpreted to mean “the number of transistors in a chip doubles every two years”, based on manufacturing trends.
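As a quick sanity check on the “doubles every two years” reading, the sketch below projects the G80’s 2006 transistor count forward to 2022 and compares it with the AD102 figure quoted earlier. The function names are illustrative, and comparing just two chips with different die sizes is only a back-of-the-envelope exercise, not a rigorous test of Moore’s Law.

```python
import math


def projected_transistors(start_count: float, start_year: int, end_year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Transistor count expected if the count doubles every fixed period."""
    doublings = (end_year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


def implied_doubling_period(start_count: float, end_count: float,
                            start_year: int, end_year: int) -> float:
    """Doubling period (in years) that would connect two observed counts."""
    return (end_year - start_year) / math.log2(end_count / start_count)


projection = projected_transistors(681e6, 2006, 2022)
period = implied_doubling_period(681e6, 76.3e9, 2006, 2022)

print(f"Projected for 2022: {projection / 1e9:.1f} billion transistors")
print("Actual AD102 count: 76.3 billion transistors")
print(f"Implied doubling period: {period:.2f} years")
```

For this pair of chips the implied doubling period comes out somewhat longer than two years, which fits the article’s description of the rule as a reasonably accurate guide rather than an exact law.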

Moore’s Law has served as a reasonably accurate representation of the semiconductor industry’s progress for nearly six decades. The tremendous gains in logic and memory in both CPUs and GPUs have largely been driven by continuous improvements in process nodes, with components becoming progressively smaller over time. However, this trend can’t continue forever, regardless of what new technology comes about.

Rather than waiting for these physical limits to be reached, companies like AMD and Intel have embraced chiplet technology, exploring innovative ways to combine these modular components to sustain the creation of increasingly powerful processors.

Decades in the future, the average PC might be home to CPUs and GPUs the size of your hand. But peel off the heat spreader and you’ll find a host of tiny chips: not three or four, but dozens of them, all ingeniously tiled and stacked together. The dominance of the chiplet has only just begun.